Regression and Classification

On 30 November 2020, there was an e-GL on the topic ‘Regression and Classification’ by Ms. Divya Chaurasia, who works as a Consumer Insight Specialist at Google. She explained how supervised and unsupervised machine learning models work, with an example of each. A supervised machine learning algorithm is trained on data for which the desired output is known. Regression and Classification are both forms of supervised machine learning. The key difference is that Classification predicts discrete variables, whereas Regression predicts continuous variables. Classification is the systematic grouping of units according to their common characteristics. ‘Will it rain tomorrow?’ is an example of a classification problem. She also explained the difference between Clustering and Grouping, the main one being that clusters don’t have labels.

She then talked briefly about the different types of classifiers: linear classifiers, tree-based classifiers such as decision trees, support vector machines, artificial neural networks, Bayesian regression, the stochastic gradient descent (SGD) classifier, the Gaussian Naïve Bayes classifier, and ensemble methods including Random Forest and AdaBoost.

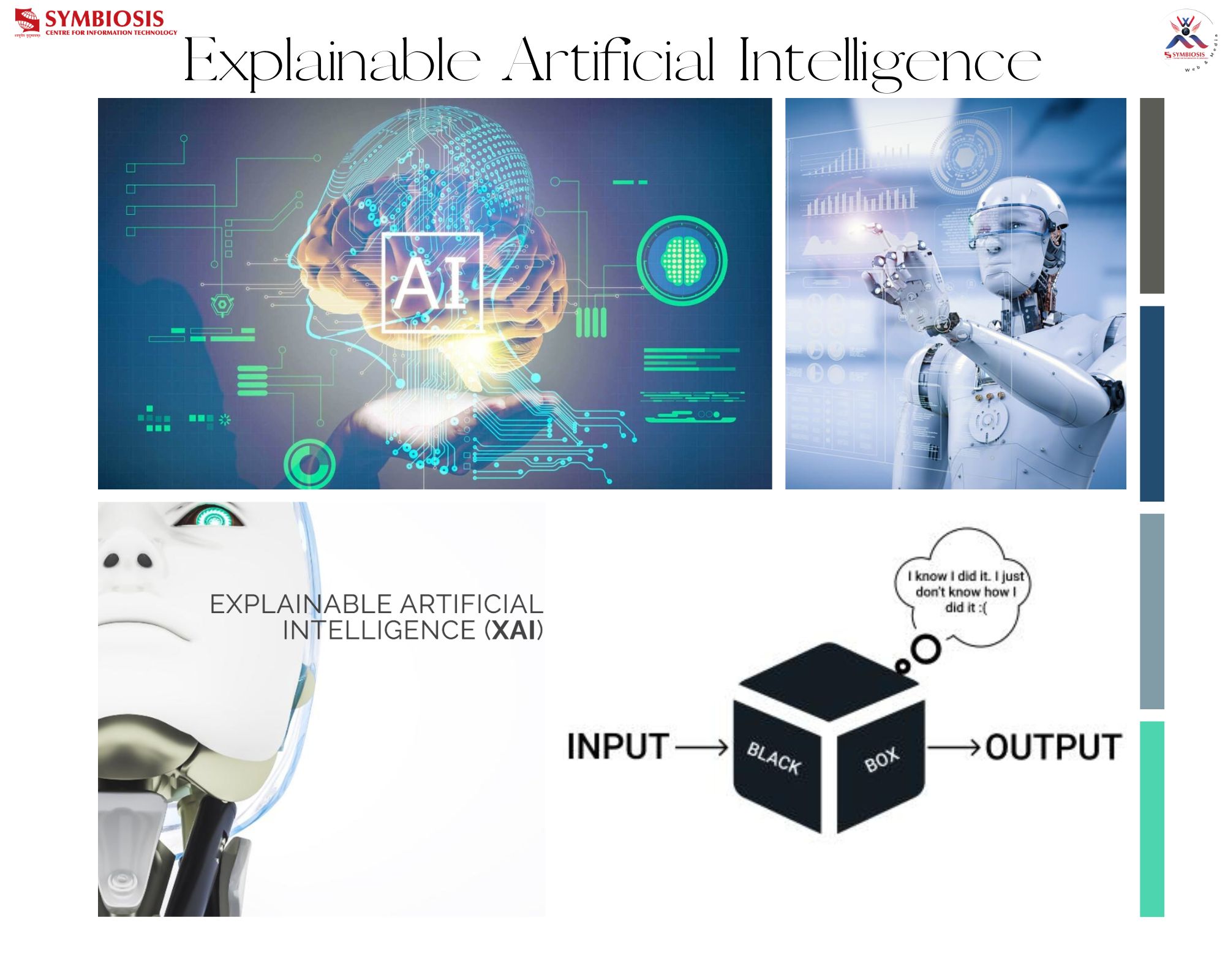

She gave a brief overview of different types of regression: linear regression, logistic regression, ridge regression, lasso regression, polynomial regression and Bayesian linear regression. The speaker focused on linear regression and logistic regression, which are basic and understood by the majority of clients. Logistic regression gives a probability, whereas linear regression gives a value. Both are well-proven algorithms, not black boxes. She next spoke about the forms of linear regression: simple linear regression (a single predictor) and multiple linear regression (several predictors). Logistic regression is actually a linear classification method: it outputs a probability, which is then compared with a threshold to assign a class.
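The value-versus-probability distinction can be sketched in a few lines of Python. The coefficients, the feature (a count of suspicious words in an email) and the 0.5 threshold below are illustrative assumptions, not figures from the session.

```python
import math

def linear_score(x, intercept, slope):
    """Linear regression output: an unbounded real value."""
    return intercept + slope * x

def logistic_probability(x, intercept, slope):
    """Logistic regression output: the same linear score passed through a sigmoid."""
    return 1.0 / (1.0 + math.exp(-linear_score(x, intercept, slope)))

def classify(x, intercept, slope, threshold=0.5):
    """Turn the probability into a discrete class label (1 or 0)."""
    return 1 if logistic_probability(x, intercept, slope) >= threshold else 0

# Hypothetical coefficients, chosen only for illustration.
INTERCEPT, SLOPE = -4.0, 1.0

for suspicious_words in (1, 4, 8):
    p = logistic_probability(suspicious_words, INTERCEPT, SLOPE)
    label = classify(suspicious_words, INTERCEPT, SLOPE)
    print(f"words={suspicious_words} probability={p:.3f} class={label}")
```

With a 0.5 threshold, the predicted class changes exactly where the linear score crosses zero, which is why logistic regression counts as a linear classifier even though it reports a probability.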

As we increase the complexity of the model, the computational power it requires increases, and we lose control over the parameters that determine how the model behaves.

Linear regression can be used to predict revenue from advertising spend using advertising data. Logistic regression can be used for spam detection.
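As a rough illustration of the advertising case, the sketch below fits a one-predictor linear regression by ordinary least squares. The spend and revenue figures are made up for the example, and `fit_simple_linear` is a hypothetical helper name, not something shown in the session.

```python
def fit_simple_linear(xs, ys):
    """Least-squares fit of y = intercept + slope * x for one predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance and variance terms (unnormalised; the 1/n factors cancel).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope

# Made-up figures in arbitrary units, not data from the session.
ad_spend = [1.0, 2.0, 3.0, 4.0]
revenue = [3.0, 5.0, 7.0, 9.0]

intercept, slope = fit_simple_linear(ad_spend, revenue)
predicted_revenue = intercept + slope * 5.0

print(f"revenue = {intercept:.2f} + {slope:.2f} * spend")
print(f"predicted revenue at spend 5.0: {predicted_revenue:.2f}")
```

The fitted slope reads directly as "extra revenue per extra unit of spend", which is part of why the model is easy to explain to clients.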

The speaker elaborated on the confusion matrix, which consists of true positives, true negatives, false positives and false negatives, giving examples related to a student being admitted based on their GRE score.
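A minimal sketch of how the four cells are counted, assuming labels are encoded as 1 (admitted) and 0 (not admitted). The two label lists are invented for illustration and do not come from the talk.

```python
def confusion_counts(actual, predicted):
    """Count the four confusion-matrix cells for binary labels (1 = positive)."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for a, p in zip(actual, predicted):
        if a == 1 and p == 1:
            counts["TP"] += 1   # admitted, and the model predicted admission
        elif a == 0 and p == 0:
            counts["TN"] += 1   # not admitted, and the model predicted rejection
        elif a == 0 and p == 1:
            counts["FP"] += 1   # not admitted, but the model predicted admission
        else:
            counts["FN"] += 1   # admitted, but the model predicted rejection
    return counts

# Hypothetical outcomes for eight applicants.
actual_admit    = [1, 1, 1, 0, 0, 0, 1, 0]
predicted_admit = [1, 0, 1, 0, 1, 0, 1, 0]

cells = confusion_counts(actual_admit, predicted_admit)
print(cells)
```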

The speaker then focused on two important parameters: sensitivity and specificity. Sensitivity tells us what fraction of the actual positives the model captures, that is, true positives divided by the sum of true positives and false negatives. Specificity tells us what fraction of the actual negatives the model captures, that is, true negatives divided by the sum of true negatives and false positives. It is a risky call if a person has cancer and the model returns a false negative, predicting that they do not have it. In such cases the type II errors (false negatives) need to be reduced, which increases the sensitivity.
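The two measures follow directly from the confusion-matrix counts. The sketch below uses invented screening counts to show how removing false negatives affects them; the numbers are assumptions for illustration only.

```python
def sensitivity(tp, fn):
    """True positive rate: share of actual positives the model catches."""
    return tp / (tp + fn)

def specificity(tn, fp):
    """True negative rate: share of actual negatives the model catches."""
    return tn / (tn + fp)

# Hypothetical screening results: 100 patients with the disease, 100 without.
before = {"TP": 80, "FN": 20, "TN": 90, "FP": 10}
# Same patients after 15 false negatives are converted into true positives.
after = {"TP": 95, "FN": 5, "TN": 90, "FP": 10}

for name, c in (("before", before), ("after", after)):
    sens = sensitivity(c["TP"], c["FN"])
    spec = specificity(c["TN"], c["FP"])
    print(f"{name}: sensitivity={sens:.2f} specificity={spec:.2f}")
```

In this toy case, cutting false negatives raises sensitivity while specificity is unchanged, because specificity depends only on true negatives and false positives.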

The speaker ended the discussion with business cases for Regression and Classification. One interesting case study showed how Ensemble Regression is used to predict sales for one of the largest technology clients in the USA from its marketing spend on print and digital ad campaigns.

The session ended with intrigued students asking questions to clear their doubts, which the speaker answered graciously.