Federated Learning – The concept of training machine learning models across decentralized devices.

Machine learning (ML) is a branch of artificial intelligence (AI) in which systems learn from data and make decisions. Because of that ability, ML is often simply called AI, even though it is technically only one part of the field. ML developed as part of AI research until the late 1970s, then branched off to evolve on its own. Today it is a significant tool in cloud computing and e-commerce and is widely used in cutting-edge technologies.

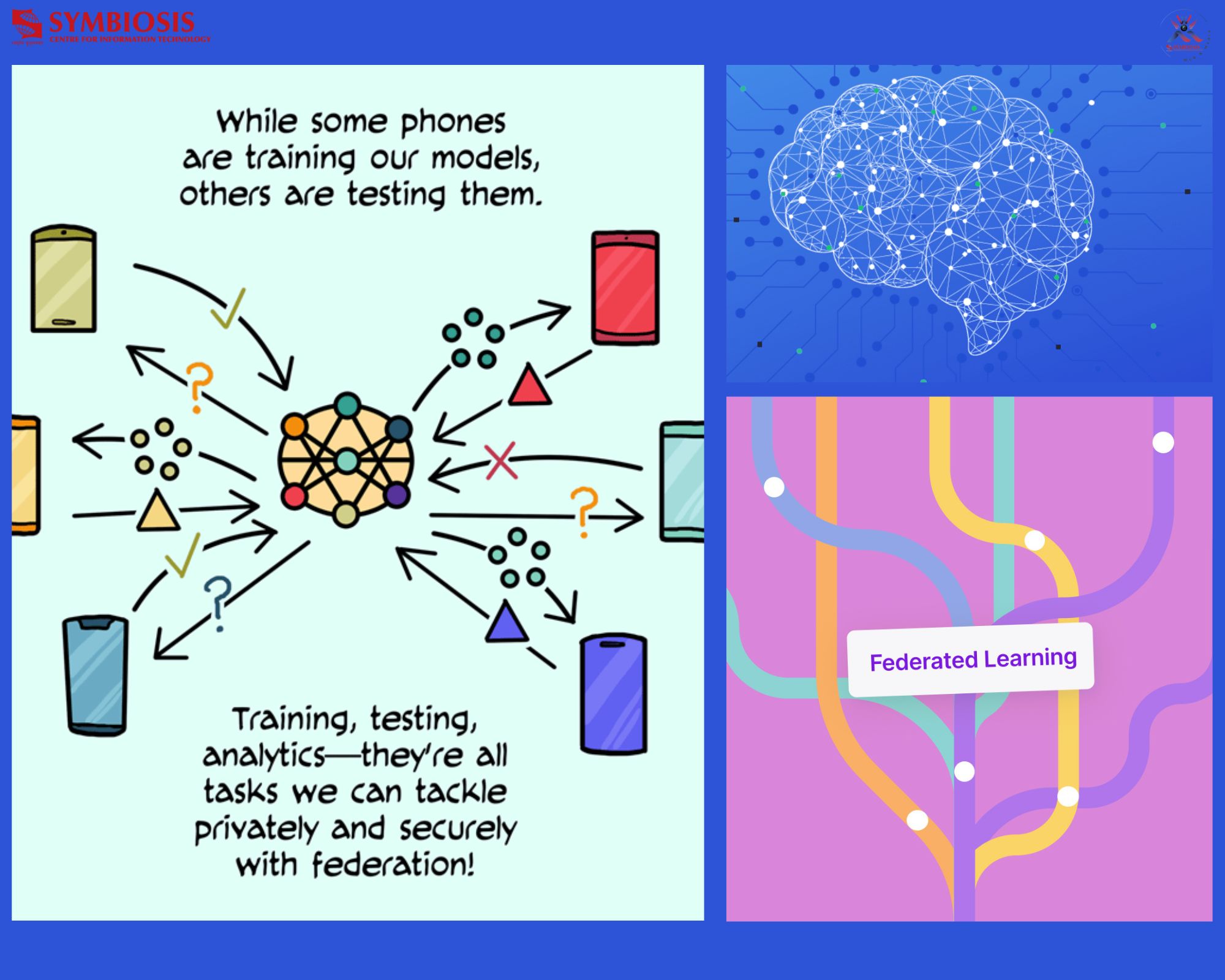

Federated learning, also known as collaborative learning, is a specialized ML technique that trains algorithms through multiple independent sessions, each using its own dataset. Think of it as a way for computers to learn together without sharing all their secrets. Usually, computers learn in one big class where everyone pools their information. In federated learning, each computer keeps its own notes and learns on its own, then shares only some of what it has learned so the group can build a smarter shared model.

Let us say we have a bunch of friends, and each friend has a notebook with their favorite things written down. Instead of handing over their notebooks, they share a few details about their favorites with each other. Everyone learns more without revealing everything. In computer terms, federated learning means having many computers in different places, each with its own data. Instead of sending that data to each other, they share only a bit of information about what they have learned. This way, they all get smarter without giving away their secrets.

There are three types of federated learning: centralized, decentralized, and heterogeneous.

Federated learning has various use cases, including applications in transportation (such as self-driving cars), Industry 4.0 (smart manufacturing), medicine (digital health), and robotics. One notable use case is next-word prediction on mobile keyboards. Here, the goal is to train a model that predicts the next word a user is likely to type without sending any user data to the cloud. Each user’s phone downloads a shared model, and as the user types, the phone collects data on word combinations. This data stays on the phone and is used to train a local copy of the model. Only the updated model parameters are sent to the server, which aggregates them from all users to create a new shared model that is distributed back to everyone.

In simple terms, think of your phone suggesting the next word while you type. To make that suggestion smart, your phone benefits from everyone’s typing habits without sharing what you actually typed. It downloads a shared model and, as you type, improves its own copy locally. What it sends to the central server is the set of changes to the model, not your text. The server combines the changes from many phones and sends back an updated, smarter model, so next-word predictions get better for everyone while your specific words stay private.
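The round described above (download the shared model, train locally, send parameters, aggregate) can be sketched in a few lines of Python. This is only a minimal illustration under loud assumptions: a tiny linear model stands in for the keyboard model, the data is synthetic, the server averages parameters weighted by each client's sample count (one common aggregation choice, often called federated averaging), and every function name here is hypothetical rather than taken from a real library.

```python
import random


def local_update(weights, data, lr=0.05, epochs=5):
    """Train a copy of the shared model on one device's private data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error on one local sample
            w -= lr * err * x      # gradient step for the slope
            b -= lr * err          # gradient step for the intercept
    return (w, b)


def federated_average(updates):
    """Server side: average client parameters, weighted by sample count."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return (w, b)


def run_rounds(client_datasets, rounds=50):
    """Repeat: send shared model out, train locally, aggregate the results."""
    global_weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = []
        for data in client_datasets:
            # Raw data never leaves this loop body; only parameters do.
            local = local_update(global_weights, data)
            updates.append((local, len(data)))
        global_weights = federated_average(updates)
    return global_weights


def make_client_data(rng, n):
    """Hypothetical private data following y = 2x + 1 plus small noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.gauss(0, 0.01)) for x in xs]


rng = random.Random(0)
clients = [make_client_data(rng, n) for n in (20, 35, 50)]
w, b = run_rounds(clients)
print(round(w, 2), round(b, 2))  # should land close to the true 2 and 1
```

Note that the server only ever sees `(w, b)` pairs and sample counts. A real deployment would add pieces this sketch leaves out, such as client sampling, secure aggregation, and handling of devices that drop out mid-round.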

Looking ahead, the future of federated learning seems promising, with potential applications in areas such as COVID-19 detection, self-driving cars, and energy efficiency. As new technologies evolve and concerns about privacy grow, federated learning is positioned to play a crucial role in the rapidly advancing field of machine learning, because it allows models to be trained and deployed without compromising data privacy.