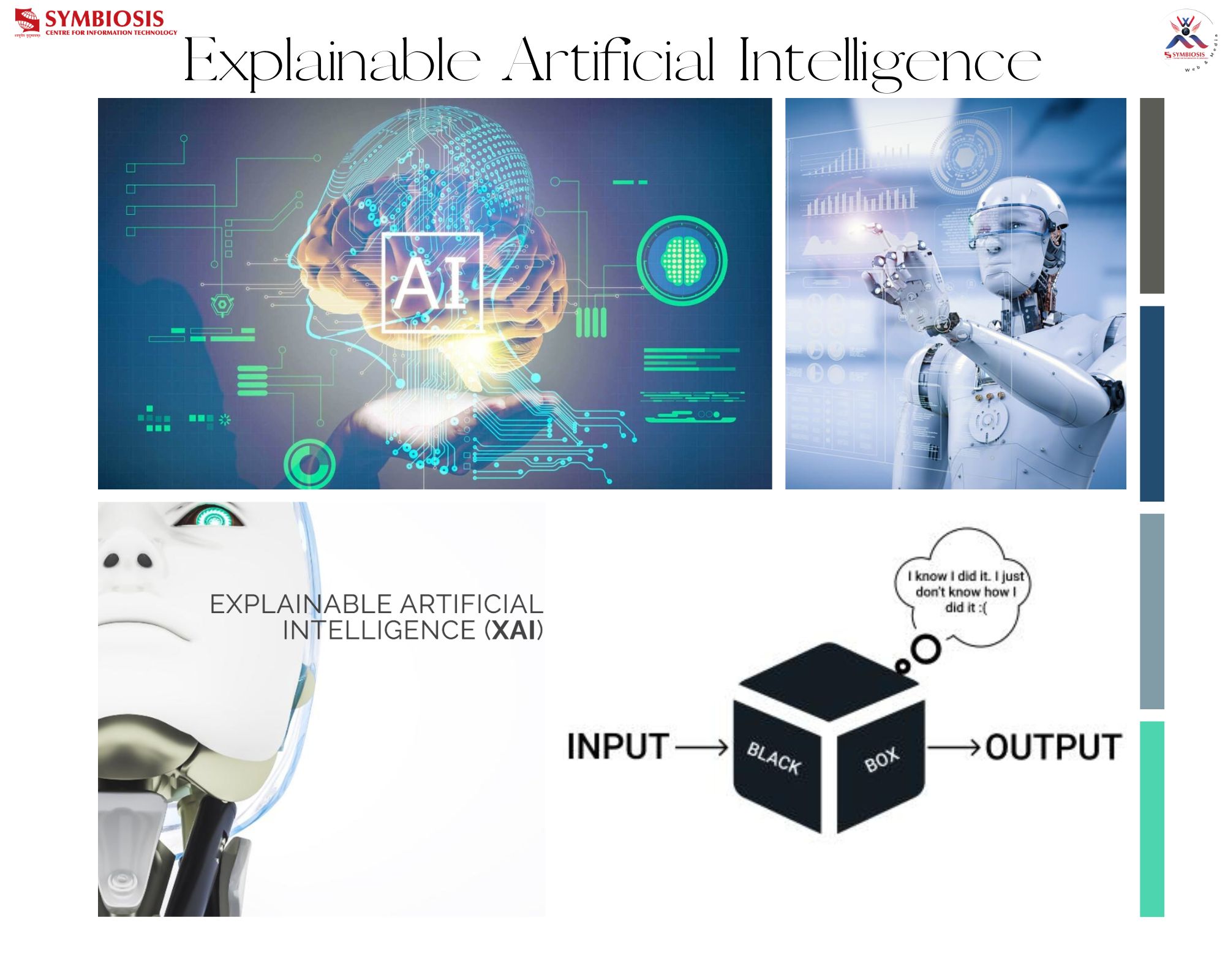

The Significance of Explainable Artificial Intelligence (XAI) in Critical Applications

Explainable Artificial Intelligence (XAI) is the area of artificial intelligence concerned with making machine learning systems transparent and interpretable. As AI becomes increasingly integrated into our daily lives, the ability to understand and trust the decisions these systems make becomes paramount.

XAI addresses this challenge by bringing transparency and interpretability to AI, especially in critical domains such as healthcare, finance, and autonomous vehicles, where understanding how an AI system reaches its decisions is essential. Common techniques include using simpler, inherently interpretable models, measuring how much each input feature influences a model’s output, and generating explanations for individual predictions. The goal is to bridge the gap between complex AI algorithms and human trust and understanding.
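The article does not name a specific method for analyzing feature importance; permutation feature importance is one widely used option. The sketch below is a minimal pure-Python illustration under that assumption: shuffle one feature column at a time and measure how much the model's accuracy drops. The toy loan model, the data, and the function names are hypothetical and exist only for illustration.

```python
import random
from typing import Callable, List, Sequence

Row = List[float]
Model = Callable[[Row], int]


def accuracy(model: Model, X: Sequence[Row], y: Sequence[int]) -> float:
    """Fraction of rows the model labels correctly."""
    correct = sum(1 for row, label in zip(X, y) if model(row) == label)
    return correct / len(y)


def permutation_importance(model: Model, X: Sequence[Row], y: Sequence[int],
                           n_repeats: int = 10, seed: int = 0) -> List[float]:
    """Average accuracy drop when each feature column is randomly shuffled.

    A large drop means the model relies on that feature; a drop near zero
    means the feature barely influences its decisions.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the labels
            X_perm = [list(row) for row in X]
            for row, value in zip(X_perm, column):
                row[j] = value
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical toy loan model: approves (1) when income exceeds 50 and
# completely ignores the second feature.
def toy_loan_model(row: Row) -> int:
    income, _unused = row
    return 1 if income > 50 else 0


X = [[30, 7], [80, 2], [55, 9], [20, 4], [90, 1], [45, 8]]
y = [toy_loan_model(row) for row in X]  # labels the model gets 100% right
scores = permutation_importance(toy_loan_model, X, y)
print(scores)  # the ignored second feature always scores 0.0
```

Because the toy model never looks at its second input, shuffling that column cannot change any prediction, so its importance is exactly zero, while shuffling income does hurt accuracy. This is the kind of evidence an explanation can surface: which inputs a model actually depends on.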

Imagine your computer making brilliant decisions: helping doctors with diagnoses or assisting banks with loan approvals. Yet the reasoning behind these decisions is often as unreadable as a secret code. XAI acts like a light switched on in a dark computer room, revealing what is happening inside.

In hospitals, XAI helps doctors understand why a system suggests a specific treatment, fostering collaboration between people and machines. In finance, it helps make decisions about approving loans or flagging fraud understandable and trustworthy. XAI becomes a kind of superhero sidekick, making sure computers explain their actions instead of remaining mysterious.

To make these intelligent computers more understandable, researchers rely on techniques such as simpler models and analyses of the factors behind each decision. Working with XAI is like conversing with an intelligent robot buddy that explains why it makes the choices it does, much as a friend might explain why a particular ice cream flavor is their favorite. By making the complexity of AI easier to follow, XAI fosters trust in, and comprehension of, the decisions these intelligent systems make.
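To make the "simpler models" idea concrete, here is a minimal sketch, assuming a linear scoring model, of how an inherently interpretable model can explain a single decision by listing each feature's contribution to the score. The feature names, weights, bias, threshold, and the `explain_decision` function are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical weights for a toy loan-scoring model (illustrative only).
WEIGHTS: Dict[str, float] = {
    "income_10k": 0.5,       # income in units of 10,000
    "debt_ratio": -4.0,      # debt divided by income
    "years_employed": 0.25,
}
BIAS = -2.0
THRESHOLD = 0.0  # approve when the score is above this value


def explain_decision(applicant: Dict[str, float]) -> Tuple[bool, float, List[Tuple[str, float]]]:
    """Score an applicant and return each feature's contribution.

    In a linear model every contribution is simply weight * value, so the
    explanation is exact: the contributions plus the bias sum to the score.
    """
    contributions = [(name, weight * applicant[name]) for name, weight in WEIGHTS.items()]
    score = BIAS + sum(value for _, value in contributions)
    approved = score > THRESHOLD
    # List the most influential factors first, whatever their sign.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return approved, score, contributions


approved, score, contributions = explain_decision(
    {"income_10k": 6, "debt_ratio": 0.5, "years_employed": 2}
)
print("approved:", approved, "score:", score)
for name, value in contributions:
    print(f"  {name}: {value:+.2f}")
```

Here income adds 3.0 to the score, the debt ratio subtracts 2.0, and employment history adds 0.5, so after the bias of -2.0 the score is -0.5 and the application is declined. A reader can see exactly which factor tipped the balance. The trade-off is that such simple models may be less accurate than complex ones, which is why post-hoc techniques like feature-importance analysis are also used.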